Realtime style transfer

- Date: March 2019

- Category: Experiments

- Tags: #2018 #TouchDesigner #kinect

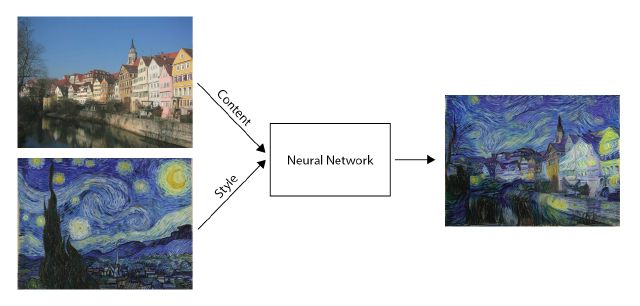

Experimenting with a neural network that performs style transfer in real time.

How it works: I capture the Kinect video feed in TouchDesigner and send it via Spout to a console program that performs the style transfer. The result is sent back to TouchDesigner, also via Spout. I then apply the mask provided by the Kinect to the stylized result and vary the opacity of this mask according to the player's position on the screen.
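The final compositing step can be sketched roughly as follows. This is a minimal illustration in plain Python, not the code or TouchDesigner network used in the experiment. The post does not say how position maps to opacity or what appears behind the masked result, so two choices here are assumptions: the linear fade from the screen centre and the blend over the original camera frame. `opacity_from_position` and `composite` are hypothetical names.

```python
def opacity_from_position(x, width):
    """Map the player's horizontal position (in pixels) to an opacity in [0, 1].

    Assumption (not from the post): fully opaque when the player is at the
    centre of the screen, fading linearly to 0 at either edge.
    """
    centre = width / 2
    distance = abs(x - centre) / centre  # 0 at the centre, 1 at the edges
    return max(0.0, min(1.0, 1.0 - distance))


def composite(original, stylized, mask, opacity):
    """Blend the stylized frame over the original frame through the mask.

    original, stylized: lists of rows of (r, g, b) tuples, values 0..255
    mask: list of rows of floats in [0, 1] (1 = player pixel, e.g. from the Kinect)
    opacity: global factor that scales the whole mask

    Assumption: pixels outside the mask show the original camera frame.
    """
    out = []
    for row_o, row_s, row_m in zip(original, stylized, mask):
        out_row = []
        for px_o, px_s, m in zip(row_o, row_s, row_m):
            a = m * opacity  # effective alpha for this pixel
            out_row.append(
                tuple(round(a * s + (1 - a) * o) for o, s in zip(px_o, px_s))
            )
        out.append(out_row)
    return out


if __name__ == "__main__":
    # A 1x2 frame: the left pixel belongs to the player, the right one does not.
    original = [[(0, 0, 0), (100, 100, 100)]]
    stylized = [[(200, 200, 200), (200, 200, 200)]]
    mask = [[1.0, 0.0]]
    alpha = opacity_from_position(160, 640)  # halfway between centre and edge
    print(composite(original, stylized, mask, alpha))
```

Driving the opacity from a single scalar keeps the per-pixel blend independent of how the position is measured, so the mapping function could be swapped for any other curve without touching the compositing.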

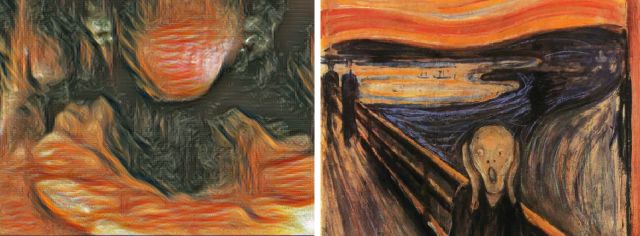

References: For the style transfer, I used Neural VJ with a few styles the network had already been trained on.

Performance: It runs at an average of 8 fps at a resolution of 640×480 on an NVIDIA GeForce GTX 970.

Other results